
Efficient Compute: Why Optimization Is Now Inevitable

Over the past two years, Buoyant Ventures has been digging into efficient compute, a critical intersection of climate-tech and AI. This category sits at the heart of one of the biggest industrial shifts in decades: the race to scale artificial intelligence while confronting energy, cost, and grid constraints.

We began by speaking with experts from Microsoft, MIT, UC Berkeley, Salesforce, AWS, data centers, and more. We published a blog post, hosted a webinar, and made our first investment in the category: Ocient, an efficient database software company. A year later, the story has only become more urgent.

Why Efficient Compute Matters

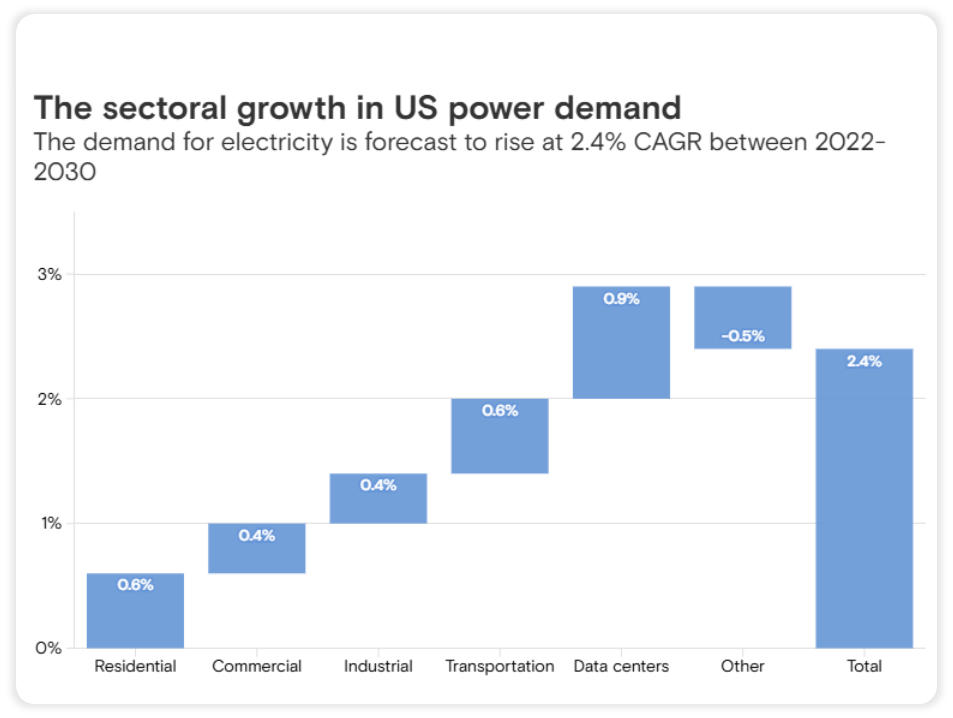

The reason is simple: AI is driving unprecedented electricity demand, and the scale of this transformation is difficult to overstate. Data centers consumed about 4.4% of total U.S. electricity in 2023. They are expected to consume approximately 6.7% to 12% by 2028, which would nearly triple their share in just five years. Data center electricity use is expected to grow faster than any other source of new demand, including transport electrification and the onshoring of manufacturing. This projected load growth is especially striking given that U.S. electricity demand has been essentially flat for decades.
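The "tripling" framing is a ratio of two shares, so it is easy to check. The Python sketch below uses only the percentages cited above. The helper name `share_growth` is ours, chosen for illustration. The compound annual rate is simply the constant yearly growth that would produce the same five-year multiple.

```python
def share_growth(start_pct: float, end_pct: float, years: int) -> tuple[float, float]:
    """Return (overall multiple, implied compound annual growth rate) between two shares."""
    multiple = end_pct / start_pct
    cagr = multiple ** (1 / years) - 1  # constant yearly rate that yields the same multiple
    return multiple, cagr


# U.S. data center share of electricity: 4.4% in 2023 vs. the 6.7%-12% range cited for 2028.
for end_pct in (6.7, 12.0):
    multiple, cagr = share_growth(4.4, end_pct, years=5)
    print(f"4.4% -> {end_pct}%: {multiple:.2f}x overall, about {cagr:.1%} per year")
```

At the upper bound the share grows about 2.7x (12 / 4.4), so "tripling" is best read as "nearly tripling." At the lower bound it grows roughly 1.5x.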

Globally, the picture is equally dramatic. Data centers accounted for roughly 1.5% of global electricity consumption in 2024, and their consumption is projected to double by 2030. The United States and China are the largest contributors to that growth. Their data center electricity consumption is projected to rise by about 130% and 170%, respectively.

The Grid Bottleneck

The challenge isn't just generating more power; it's delivering power where and when it's needed. The grid has become the fundamental bottleneck to bringing new data centers online. Utilities face backlogs of up to seven years for new power connections, a timeline that is incompatible with the pace of AI development and deployment.

The infrastructure constraints are multifaceted. Permitting processes, siting challenges, and equipment shortages could put approximately 20% of data center projects at risk of delay by 2030. Even well-funded developers with land and capital can find themselves stuck in the interconnection queue.

This is where ambition collides with reality. The grid has become the limiting factor for AI.

Training vs. Inference

Not all AI workloads are created equal, and understanding the distinction is crucial for addressing the energy challenge. Training a massive model like GPT-4 sits at the headline-grabbing end of the spectrum. It is a resource-intensive effort estimated to cost about $100 million and require roughly 30 MW of power. However, training jobs offer some flexibility. They can often be shifted to off-peak hours, moved to regions with abundant renewable energy, or scheduled around grid constraints.

Inference presents a fundamentally different challenge: it is the always-on reality of AI deployment. When a user prompts ChatGPT, they expect an instant response. That expectation creates constant, latency-sensitive demand that is difficult to time-shift or schedule around grid conditions. That said, inference spans a spectrum. Batch video generation or bulk document summarization, for example, can often be scheduled like training. Conversational AI, robotics, and other interactive applications, by contrast, demand instant responses.
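The difference between deferrable and latency-sensitive work can be illustrated with a toy scheduler. This is a minimal sketch under stated assumptions, not how any particular operator schedules jobs:

- The hourly price signal is made up.
- The `Job` fields and the greedy "cheapest hours before the deadline" policy are hypothetical.
- The sketch ignores capacity limits and does not require a job's hours to be contiguous.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    hours: int         # hours of compute the job needs
    deadline: int      # deferrable jobs must use hour slots with index < deadline
    interactive: bool  # True for latency-sensitive work that cannot wait


def schedule(jobs: list[Job], price: list[float]) -> dict[str, list[int]]:
    """Assign hour slots: interactive jobs run immediately, deferrable jobs take the
    cheapest hours before their deadline. Hypothetical greedy policy; no capacity limit."""
    plan: dict[str, list[int]] = {}
    for job in jobs:
        if job.interactive:
            # No flexibility: start now and run continuously, whatever the grid conditions.
            plan[job.name] = list(range(job.hours))
        else:
            window = range(min(job.deadline, len(price)))
            cheapest = sorted(window, key=lambda h: price[h])[: job.hours]
            if len(cheapest) < job.hours:
                raise ValueError(f"{job.name} cannot finish before its deadline")
            plan[job.name] = sorted(cheapest)
    return plan


# Hypothetical hourly price signal ($/MWh) over a six-hour horizon.
price = [90.0, 85.0, 40.0, 35.0, 60.0, 120.0]
jobs = [
    Job("chat-serving", hours=6, deadline=6, interactive=True),
    Job("batch-summarize", hours=2, deadline=6, interactive=False),
]
print(schedule(jobs, price))
```

With this made-up price curve, the interactive service occupies every hour. The batch job lands in hours 2 and 3, the two cheapest. The greedy choice is simply the smallest policy that shows the idea; a production scheduler would have to account for far more.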

As AI spreads into everyday workflows and tools, inference demand is expected to grow rapidly and overtake training as the dominant driver of AI electricity use.

Powering the AI Future

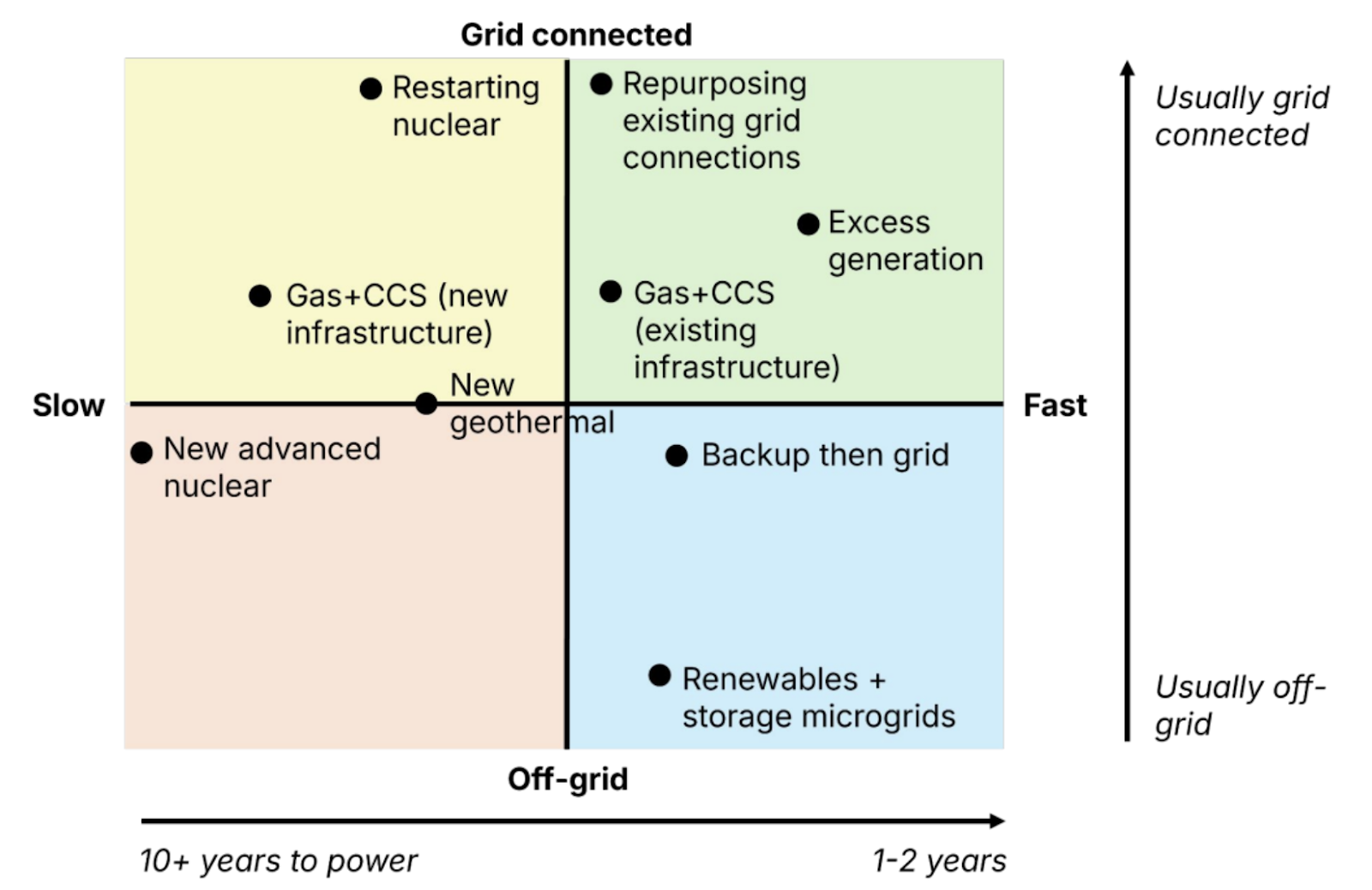

Meeting this surge in demand requires an "all of the above" energy approach, but each pathway faces significant constraints that limit its ability to solve the challenge independently.

- Natural Gas: Familiar territory for utilities as a source of reliable baseload and peaking power, but deployment is increasingly constrained by supply chain bottlenecks. Gas turbine lead times have stretched to approximately five years, leaving new capacity well behind the timeline that AI infrastructure growth requires. Turbine manufacturers such as Mitsubishi are expanding production capacity in response, though that will not solve the problem in the near term.

- Nuclear (Small Modular Reactors): Promising as a reliable, always-on source that could provide the consistent power AI workloads demand. However, commercial SMR deployments aren't expected until 2030 or later. That creates a significant mismatch with immediate AI infrastructure needs, and costs may also prove prohibitive. Even so, data center developers like Equinix are signing deals with nuclear firms.

- Solar + Battery Storage: Solar is the most attractive option on both cost and deployment speed metrics. However, batteries aren’t yet a full replacement for diesel backup at data centers. Most battery systems today provide only two to four hours of power, while mission-critical facilities plan for outages that could last a day or more. Add in high upfront costs, space requirements, and reliability concerns, and diesel remains the default option. Over time, as long-duration storage technologies mature, batteries may begin to displace diesel, but for now they play a supporting role rather than a standalone solution.

- Virtual Power Plants (VPPs): Touted as a distributed solution that could aggregate diverse energy resources to meet peak demand. While promising in theory, VPPs face substantial utility integration challenges and regulatory hurdles that limit their ability to scale quickly enough to address immediate needs. Voltus and Cloverleaf Infrastructure are tackling this issue with VPP programs for hyperscalers, expected to come online by 2027.
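The limitation noted for solar plus battery storage is largely energy arithmetic. A battery's duration is its energy capacity divided by the load it carries. Below is a minimal sketch that assumes a hypothetical 30 MW facility and a four-hour battery; both numbers are chosen for illustration, not taken from any specific site.

```python
def backup_coverage(battery_mwh: float, load_mw: float, outage_hours: float) -> tuple[float, float]:
    """Return (hours the battery can carry the load, energy shortfall in MWh for the outage)."""
    hours_covered = battery_mwh / load_mw
    shortfall_mwh = max(0.0, load_mw * outage_hours - battery_mwh)
    return hours_covered, shortfall_mwh


# Hypothetical 30 MW facility with a four-hour battery (120 MWh) facing a 24-hour outage.
hours, gap = backup_coverage(battery_mwh=120.0, load_mw=30.0, outage_hours=24.0)
print(f"battery covers {hours:.0f} h; {gap:.0f} MWh must come from another source")
```

Riding through the full 24 hours would require 720 MWh of storage. That is six times the energy capacity of the four-hour system.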

The chart from Sightline Climate breaks down the different paths to power by speed and grid connectivity. The harsh reality is that every pathway faces significant implementation hurdles, and none can meet the AI energy challenge alone.

This constraint-driven environment is what makes efficient compute not just valuable, but inevitable. Relatedly, there is a growing conversation about data center flexibility, and three themes are emerging:

- Smarter internal power flows – Redesigning how electricity moves within the facility to increase overall utilization.

- Software-driven flexibility – Using software to time-shift certain workloads or draw on on-site backup generation when the grid is constrained.

- Unlocking stranded capacity – Data centers are built to meet rare peak demand events but typically operate at those levels only a few hours each year; software can help tap into that unused capacity.
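The stranded-capacity idea comes down to comparing provisioned power with actual draw, hour by hour. The sketch below rests on two hypothetical inputs: a made-up six-hour load profile and a 90% "near peak" threshold. It only measures headroom. It does not model how software would safely put that headroom to use.

```python
def stranded_capacity(hourly_load_mw: list[float], provisioned_mw: float,
                      threshold: float = 0.9) -> tuple[float, int]:
    """Return (average unused headroom in MW, hours at or above threshold x provisioned)."""
    headroom = [provisioned_mw - load for load in hourly_load_mw]
    avg_headroom = sum(headroom) / len(headroom)
    near_peak_hours = sum(1 for load in hourly_load_mw if load >= threshold * provisioned_mw)
    return avg_headroom, near_peak_hours


# Hypothetical facility provisioned for 100 MW with a short demand spike.
profile = [60.0, 60.0, 70.0, 95.0, 70.0, 65.0]
avg, peaks = stranded_capacity(profile, provisioned_mw=100.0)
print(f"average headroom {avg:.0f} MW; near-peak hours: {peaks}")
```

In this toy profile the facility runs near its provisioned limit for one hour. It leaves an average of 30 MW unused, and that idle capacity is the "stranded" portion the text describes.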

Increasing Cost Pressures

For years, AI compute costs benefited from steady hardware efficiency gains and software optimizations, a digital extension of Moore's Law. As those gains slow, the economics of AI are fundamentally shifting.

Energy costs are still a relatively small share of total compute expenses today, but they are becoming increasingly material. As inference scales and energy prices rise in response to constrained supply, power will become more than an availability problem. It will become a major cost driver that directly affects the economics of AI deployment.
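A rough per-accelerator-hour model shows why energy can be a small slice of cost today and still matter. Every input here is a placeholder, not a measured figure:

- A 1 kW all-in power draw.
- A power usage effectiveness (PUE, facility energy divided by IT energy) of 1.25.
- $2.00 per hour of amortized hardware cost.

```python
def energy_share_of_cost(power_kw: float, pue: float, price_per_kwh: float,
                         hardware_cost_per_hour: float) -> float:
    """Fraction of an accelerator-hour's cost that is electricity (illustrative model).

    power_kw: average draw of the accelerator plus its share of the server.
    pue: facility energy / IT energy, so facility overhead scales the bill.
    hardware_cost_per_hour: amortized hardware cost per hour of operation.
    """
    energy_cost = power_kw * pue * price_per_kwh
    return energy_cost / (energy_cost + hardware_cost_per_hour)


# Placeholder inputs; compare a baseline power price with a doubled one.
for price in (0.08, 0.16):
    share = energy_share_of_cost(1.0, 1.25, price, 2.0)
    print(f"${price:.2f}/kWh -> energy is {share:.1%} of hourly cost")
```

With these placeholder inputs, electricity is under 5% of the hourly cost at $0.08/kWh and roughly 9% at $0.16/kWh. That is still small next to hardware, but the share nearly doubles when power prices double.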

Hardware costs remain the largest expense category, but the trajectory is concerning. With tariffs expected to push AI infrastructure costs higher and supply chain constraints persisting, the pressure to optimize across all dimensions (hardware efficiency, software optimization, and energy consumption) will only intensify.

The Shift in the AI Race

In 2024, the AI industry operated under a "growth at all costs" paradigm. The focus was primarily on chip-level efficiency gains, optimizing training workloads, and exploring co-location opportunities to reduce latency and costs. Success was measured by model size, parameter counts, and computational speed.

This year, the tone has shifted. System-level efficiency, or how every layer of the AI stack fits together, has become a major focus. DeepSeek’s breakthrough earlier this year was a wake-up call, showing that cutting-edge models could be trained at a fraction of the cost without the most advanced chips. More recently, the company introduced a model that cuts inference costs by up to half, underscoring how valuable software and architectural innovation can be. Hardware manufacturers and hyperscale cloud providers are now prioritizing inference cost reduction. They recognize that whoever delivers inference at the lowest total cost, combining energy and hardware efficiency, will capture the largest share of the expanding AI market.

Where Buoyant Is Focused

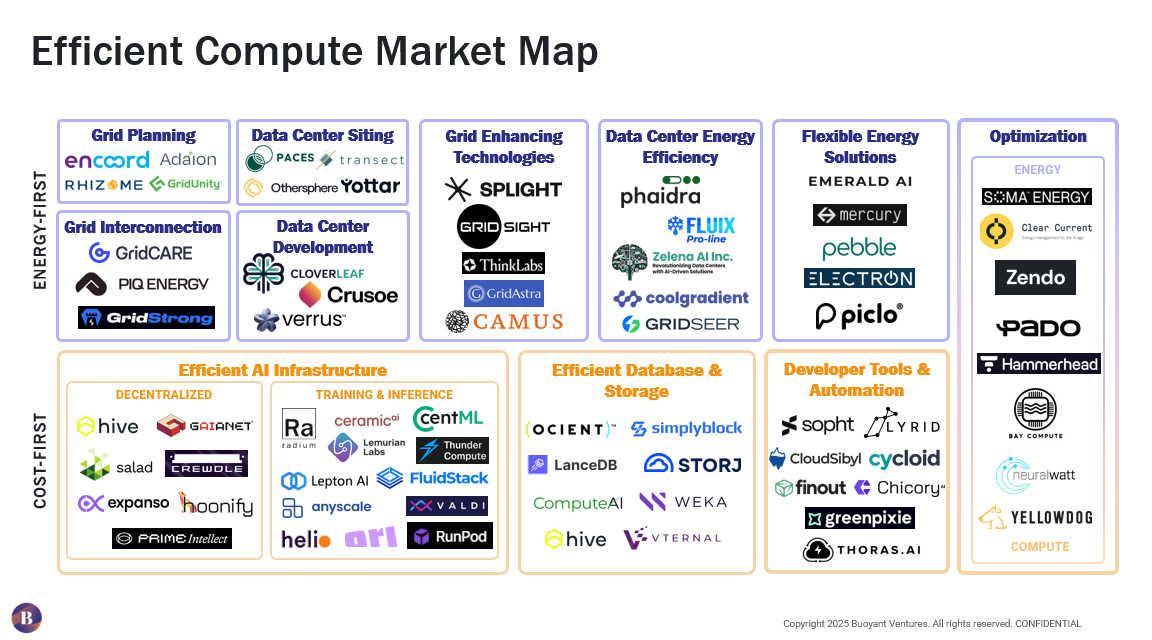

At Buoyant, we see these constraints as an opportunity. Our Efficient Compute Market Map organizes this emerging landscape along two primary axes:

Energy-First Solutions:

- Grid planning and interconnection software aimed at accelerating speed to power

- Developers and technologies for data center development, with an emphasis on interconnection or on-site generation

- Grid-enhancing technologies that expand the capacity of today’s grid

- Flexible energy solutions that can respond to grid signals and congestion

- Optimization across data center operations, including HVAC and energy resources

Cost-First Solutions:

- Efficient AI infrastructure that optimizes training and inference workloads to lower cost and energy use

- Developer tools that help end users manage costs and improve GPU utilization

- Databases and storage solutions designed for AI workloads

- Compute or workload optimization tools that balance performance and resource consumption

We are particularly focused on three high-impact categories where we see the greatest potential for transformative innovation:

1. Efficient AI Infrastructure: Novel approaches to optimize both training and inference workloads, including specialized software architectures and hybrid deployment models.

2. Developer Tools: Platforms and solutions that help teams manage costs and GPU utilization regardless of scale.

3. Energy and Compute Optimization: Predicting and balancing AI workload demand to avoid wasted resources.

The Long-Term Opportunity

Efficient compute sits squarely at the intersection of AI and climate: two of the most powerful themes shaping this decade. As models multiply and adoption spreads, the energy challenge will only intensify.

Efficiency is no longer a nice add-on. The companies that can drive systematic efficiency improvements across the entire AI stack will define the next wave of technology winners in a resource-constrained world.

Are you building a company in the efficient compute category? We'd love to talk with you about how Buoyant Ventures can support your mission.